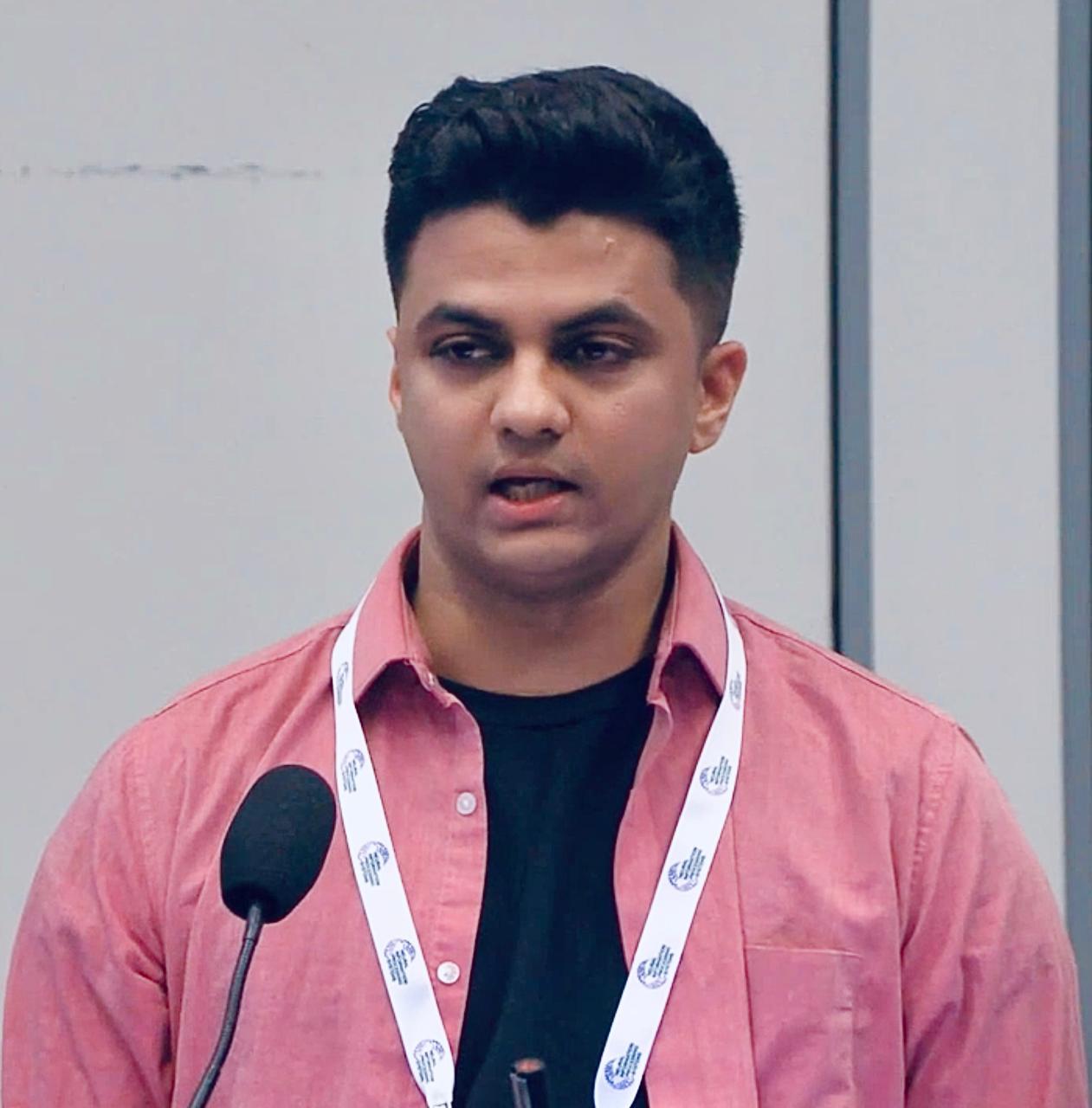

Arjun Ashok

I am a Visiting Researcher (Full-Time) at ServiceNow Research, Montreal, Canada and a PhD student at MILA-Quebec AI Institute and Université de Montréal advised by Irina Rish and Alexandre Drouin. My research interests are in time series forecasting. My current work focuses on integrating unstructured contextual information into forecasting algorithms, to enable more accurate forecasts and to support more complex tasks beyond traditional forecasting (such as scenario planning).

My email address is arjun.ashok.psg [at] gmail [dot] com. Email me if you'd like to connect!

News

| Aug '25 | One paper out on arXiv, on strategies for improved zero-shot context-aided forecasting with LLMs. |

| May '25 | Context is Key is accepted for publication at ICML 2025. Another paper accepted at the workshop on Foundation Models for Structured Data. |

| Dec '24 | Co-organized The first NeurIPS workshop on Time Series in the Age of Large Models at NeurIPS 2024 in Vancouver, Canada, with 1000+ attendees. Check out all the papers and talks from the workshop here. |

| Oct-Nov '24 | Paper on natural-language based context-aware forecasting, Context is Key: A Benchmark for Forecasting with Essential Textual Information, is out on arXiv. Gave an oral presentation at the FM4TS Workshop at ACM ICAIF 2024, New York, USA. |

| July '24 | Gave an invited talk on Natural Language based Context-Aware Forecasting at the International Symposium on Forecasting (ISF) 2024. |

| May '24 | Presented TACTiS-2 at ICLR 2024. TACTiS-2 is a highly flexible model for multivariate probabilistic time series prediction tasks. Check out the tweet thread and poster here! |

| Feb '24 | The full version of Lag-Llama released with open-source model checkpoints! Check the announcement here! |

| Jan '24 | I gave a talk on our efforts Towards General-Purpose Models for Time-Series Prediction at the Winter 2024 Montreal Time Series Meetup. |

| Jan '24 | TACTiS-2 accepted at ICLR 2024! |

| Dec '23 | I gave a talk on Building Foundation Models for Time Series Data at the 6th workshop on Neural Scaling Laws co-located with NeurIPS 2023. |

| Oct '23 | TACTiS-2 is out on arXiv. |

| Oct '23 | A preliminary version of Lag-Llama is out on arXiv. |

| Jan '23 | One paper on out-of-distribution detection accepted to ICLR 2023. This is work in collaboration with folks at ML Collective mentored by Rosanne Liu. |

| Jan '23 | Started as a Visiting Researcher (Full-Time) at ServiceNow Research, Montreal. Excited to continue working on problems in time series representation learning! |

| Aug '22 | Preliminary work on self-supervised learning objectives for weather time series accepted at the AAAI 2022 Fall Symposium on Climate Change. |

| Jul '22 | One paper on Class-Incremental Learning accepted as a full paper at ECCV 2022. |

| Jun '22 | Started as a Research Intern at IBM Research, India. I'll be working on building self-supervised learning objectives and pre-trained models for geospatial weather time series. |

| Jun '22 | One paper on cross-task generalization in NLP submitted to EMNLP 2022 (Update: Accepted). |

| Apr '22 | One paper on Class-Incremental Learning accepted at the CLVISION Workshop at CVPR 2022 as a non-archival paper (Update: Accepted at ECCV 2022). |

| Apr '22 | One reproducibility report on Self-Supervision and Few-shot Learning accepted at the ML Reproducibility Challenge 2021 (Fall Edition) and published at ReScience-C. |

| Oct '21 | One paper on out-of-distribution generalization accepted at AAAI 2022 as a student abstract. |

| Jun '21 | Started as a Research Assistant at IIT Hyderabad under Prof. Vineeth Balasubramanian. |

Selected Papers

Beyond Naïve Prompting: Strategies for Improved Zero-shot Context-aided Forecasting with LLMs

Arjun Ashok, Andrew Robert Williams, Vincent Zhihao Zheng, Irina Rish, Nicolas Chapados, Étienne Marcotte, Valentina Zantedeschi, Alexandre Drouin

Preprint; under review.

Context is Key: A Benchmark for Forecasting with Essential Textual Information

Arjun Ashok*, Andrew Robert Williams*, Étienne Marcotte, Valentina Zantedeschi, Jithendaraa Subramanian, Roland Riachi, James Requeima, Alexandre Lacoste, Irina Rish, Nicolas Chapados, Alexandre Drouin (* Co-first authorship)

Accepted at ICML 2025.

Workshop Presentations:

Poster Presentation at NeurIPS 2024 Workshop on Time Series in the Age of Large Models

Oral Presentation at ACM ICAIF 2024 Workshop on Foundation Models for Time Series: Exploring New Frontiers

Poster Presentation at Montreal AI Symposium 2024

TL;DR Abstract arXiv Code Benchmark Visualization Tweet

TACTiS-2: Better, Faster, Simpler Attentional Copulas for Multivariate Time Series

Arjun Ashok, Étienne Marcotte, Valentina Zantedeschi, Nicolas Chapados, Alexandre Drouin

Accepted at ICLR 2024.

Workshop Presentations:

Oral Presentation at Montreal AI Symposium 2024

TL;DR Abstract arXiv Code OpenReview Tweet Poster Blog 15-min Video

Lag-Llama: Towards Foundation Models for Time Series Forecasting

Arjun Ashok*, Kashif Rasul*, Andrew Robert Williams, Hena Ghonia, Rishika Bhagwatkar, Arian Khorasani, Mohammad Javad Darvishi Bayazi, George Adamopoulos, Roland Riachi, Nadhir Hassen, Marin Biloš, Sahil Garg, Anderson Schneider, Nicolas Chapados, Alexandre Drouin, Valentina Zantedeschi, Yuriy Nevmyvaka, Irina Rish (* Co-first authorship)

Workshop Presentations:

Poster Presentation at NeurIPS 2023 Workshop on Robustness of Few-shot and Zero-shot Learning in Foundation Models

TL;DR Abstract Paper Code (1k+ ★) Weights Demo Tweet 15-min Video

Previous Work

I previously worked on problems in out-of-distribution generalization, continual learning, and few-shot learning, spanning the domains of computer vision and natural language processing. Please check my Google Scholar for a list of previous publications.

Invited Talks

- Context-Aided Forecasting: Progress So Far and Next Big Challenges.
  IIF Workshop on Open-Source Forecasting, Beijing, China, June 2025.

- Context is Key: A Benchmark for Forecasting with Essential Textual Information.
  Morgan Stanley, New York, Nov 2024.
  Bombardier Inc., Virtual, Nov 2024.

- Zero-Shot Forecasting with Natural Language Contextualization.
  International Symposium On Forecasting (ISF) 2024, Dijon, France, July 2024.

- Lag-Llama: Towards Foundation Models for Time Series Forecasting.
  AI @ Scale Workshop, Mila-Quebec, Montreal, Mar 2024.

- Towards General-Purpose Models for Time-Series Prediction.
  The Winter 2024 Montreal Time Series Meetup, Montreal, Jan 2024.

- Building Foundation Models for Time Series Data.
  The 6th workshop on Neural Scaling Laws co-located with NeurIPS 2023, Virtual, Dec 2023.

Academic Service

- Co-organizer of The first NeurIPS workshop on Time Series in the Age of Large Models at NeurIPS 2024.

- Served as a reviewer at: ICML 2025, ICLR 2025, ICLR 2024, NeurIPS 2023, AISTATS 2022, AISTATS 2021, CVPR 2022, CVPR 2021, TMLR, TPAMI.

- Organized the ICLR 2024 Time Series Meetup in Vienna in May 2024.

Misc

On the side, I am a Carnatic Musician and a student of Vidwan Bharat Sundar. I have performed Carnatic concerts in multiple venues in India, and continue to perform in and around Montréal and Ottawa regularly. Here is a recording of a concert of mine from July 2024. I'm also a hybrid athlete (I enjoy running, biking and working out), and enjoy reading non-fiction.